changedetection.io with Selenium WebDriver is a very powerful tool for monitoring website changes. I just wanted to share a warning for others who may not be aware of how much traffic this great tool can generate with even a handful of URLs.

Why use changedetection.io with Selenium WebDriver

A couple of days ago I had a use case where I wanted to generate some realistic traffic to a website I work on, to trigger a particular scenario in the code. Nothing nefarious: curl simply wouldn't work for this test, because a real browser was needed (to execute the JavaScript as well). And I couldn't just open a browser and refresh the page 10 times, as the site stats data was refreshed every 5 minutes (by design). That's why I needed a constant stream of realistic traffic with JavaScript execution.

I initially wanted to use a Selenium cluster/grid, but I hadn't had time to set it up properly. However, I already had a working docker-compose.yml for changedetection.io.

For those of you who don't know, changedetection.io is a great little tool that tracks changes on websites automatically and sends notifications when something changes (it's great for tracking Amazon URLs, for example, if you are watching some items for price drops). And it supports Selenium WebDriver natively, so I figured it would do just fine as a replacement for Selenium Grid, since I only needed to load 10-12 URLs into it. Indeed, it worked well and as advertised for this, except for the traffic fiasco.

I would typically roll it out on a remote server/VPS with near-unlimited traffic, but to save time (also, Chrome WebDriver hardware requirements are no joke, especially with concurrency) I decided to spin it up on a local 8c/16t Ryzen home server. That was my mistake.

If you live in the US, internet providers typically cap residential broadband. Some refer to these as “predatory data caps”: my home residential internet, for example, is capped at 1 TB of traffic a month, and most US providers have similar limits. Most consumers simply have no alternative provider to switch to, which is what makes these caps possible. And I guess I just didn't realize how much traffic my little test would generate, lol.

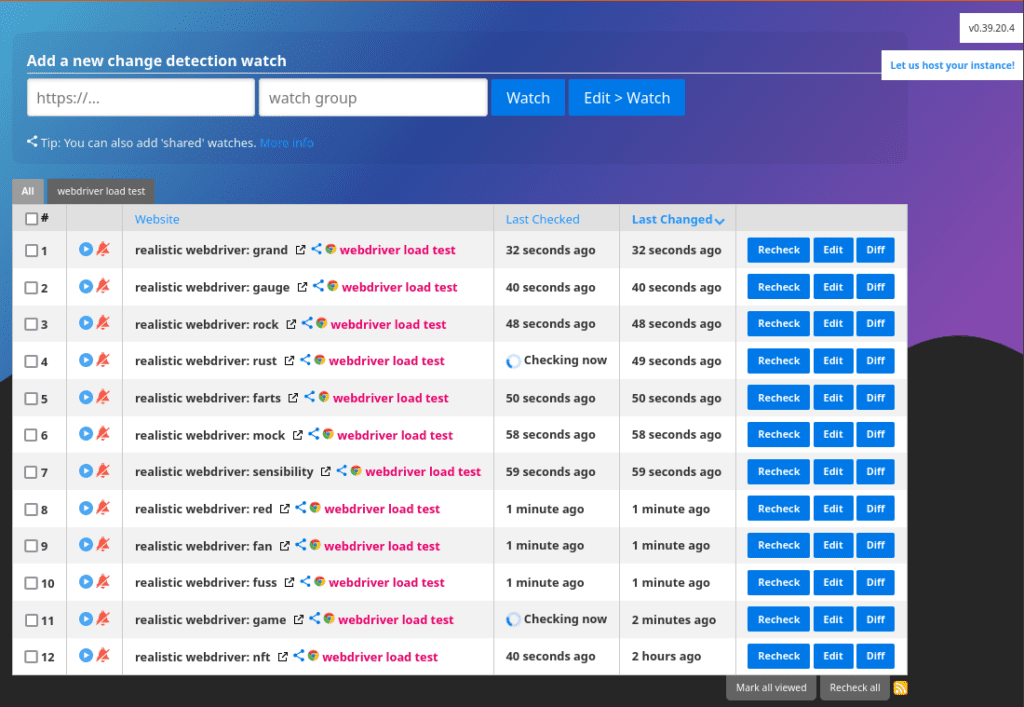

My setup: 12 URLs checked every 1 to 4 minutes

I set up changedetection.io to check only 12 URLs, but to check them fairly often: every 1 to 4 minutes for each URL.

That number and frequency were needed to trigger a certain scenario on the website I was working on at the time.
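To put that frequency in perspective: at the 1-minute end, 12 URLs × 1,440 minutes a day comes to 17,280 full page loads per day. The short Python sketch below does that arithmetic for a few intervals. It assumes each URL is checked on a fixed interval, and the 5 MB per page load it uses is a hypothetical page weight for illustration, not something I measured.

```python
# Rough page-load and traffic estimate for N URLs checked on a fixed interval.
MINUTES_PER_DAY = 24 * 60


def loads_per_day(num_urls: int, interval_minutes: float) -> float:
    """Full page loads per day if every URL is checked once per interval_minutes."""
    return num_urls * MINUTES_PER_DAY / interval_minutes


def gb_per_day(loads: float, mb_per_load: float) -> float:
    """Daily transfer in GB (decimal units) for a given average page weight in MB."""
    return loads * mb_per_load / 1000


if __name__ == "__main__":
    for interval in (1, 2, 4):
        loads = loads_per_day(12, interval)
        # mb_per_load=5 is a hypothetical page weight, not a measured value.
        print(f"every {interval} min: {loads:,.0f} loads/day, "
              f"~{gb_per_day(loads, 5):.0f} GB/day at 5 MB/load")
```

Ad-heavy pages that keep pulling in new content can easily weigh more than that, so the real numbers can be worse.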

Because the website I was testing is so heavily laden with ads (which meant the webdriver could hit multiple timeouts and hang for a while), it took some modifications to make sure that one webdriver container could handle several requests in parallel.

I had to increase the maximum number of concurrent sessions to 16:

```yaml
#Increasing session concurrency per container
- SE_NODE_MAX_SESSIONS=16
- SE_NODE_OVERRIDE_MAX_SESSIONS=true
```
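A rough way to reason about how many parallel sessions are needed is Little's law: the average number of busy sessions equals the rate at which checks start multiplied by how long each check holds a session. The Python sketch below applies it to 12 URLs. The 60 seconds per check is a hypothetical figure for a slow, ad-heavy page, not a measurement, and the function name is just for illustration.

```python
# Little's law sketch: average busy sessions L = lambda * W, where lambda is
# the rate at which checks start and W is how long each check holds a session.


def busy_sessions(num_urls: int, interval_seconds: float, seconds_per_check: float) -> float:
    """Average number of browser sessions in use at once (steady state)."""
    arrival_rate = num_urls / interval_seconds  # checks started per second
    return arrival_rate * seconds_per_check


if __name__ == "__main__":
    # Hypothetical: an ad-heavy page keeps a session busy for ~60 s.
    for interval in (60, 240):
        print(f"every {interval} s -> ~{busy_sessions(12, interval, 60):.1f} busy sessions")
```

With every URL on a 1-minute interval that works out to about 12 sessions on average. Little's law only gives an average; bursts where several checks fire together can briefly exceed it, which is one reason to leave some headroom above that number.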

I also reduced the session request timeout and the retry interval; otherwise the webdriver would hang due to the heavy ads:

```yaml
# improve timeouts - otherwise ads gonna be loading forever:
- SE_SESSION_REQUEST_TIMEOUT=15
- SE_SESSION_RETRY_INTERVAL=2
```

Here’s the full changedetection.io docker-compose.yml setup I used:

```yaml
version: "3.7"

networks:
  default:
    external: true
    name: infranet

services:
  haproxy:
    image: haproxy:lts-alpine
    container_name: ${PROJECT_NAME}-haproxy
    logging:
      driver: "json-file"
      options:
        max-file: "5"
        max-size: "10m"
    restart: always
    depends_on:
      - changedetection
    ports:
      - "${HOST1}:${CNT1}"
    volumes:
      - ${CERTS_DIR}:/certs
      - ${FS_DIR}/haproxy/config:/usr/local/etc/haproxy:ro

  changedetection:
    image: ghcr.io/dgtlmoon/changedetection.io
    container_name: ${PROJECT_NAME}-app
    logging:
      driver: "json-file"
      options:
        max-file: "5"
        max-size: "10m"
    restart: always
    environment:
      PUID: ${PUID}
      PGID: ${PGID}
      WEBDRIVER_URL: http://${PROJECT_NAME}-seleniumchrome:4444/wd/hub
    volumes:
      - ${FS_DIR}/datastore:/datastore

  browser-chrome:
    shm_size: 8gb
    container_name: ${PROJECT_NAME}-seleniumchrome
    hostname: ${PROJECT_NAME}-seleniumchrome
    image: selenium/standalone-chrome:latest
    environment:
      - VNC_NO_PASSWORD=1
      - SCREEN_WIDTH=1920
      - SCREEN_HEIGHT=1080
      - SCREEN_DEPTH=24
      #Increasing session concurrency per container
      - SE_NODE_MAX_SESSIONS=${SE_NODE_MAX_SESSIONS}
      - SE_NODE_OVERRIDE_MAX_SESSIONS=true
      # add timeouts - otherwise ads gonna be loading forever:
      - SE_SESSION_REQUEST_TIMEOUT=15
      - SE_SESSION_RETRY_INTERVAL=2
    volumes:
      # Workaround to avoid the browser crashing inside a docker container
      # See https://github.com/SeleniumHQ/docker-selenium#quick-start
      - /dev/shm:/dev/shm
    restart: always
```

And here’s the .env file I was using along with it:

```ini
#general settings
COMPOSE_PROJECT_NAME=changedetection
PROJECT_NAME=changedetection
TZ=America/New_York
FS_DIR=/mnt/1tb_drive/dockervolumes/changedetection
PGID=1000
PUID=1000
CURRENT_UID=1000:1000
ENV_NAME=hs-edge1

#PORTS
HOST1=31740
CNT1=80

#SELENIUM
SE_NODE_MAX_SESSIONS=16

#HAPROXY
CERTS_DIR=/mnt/1tb_drive/dockervolumes/dnsrobocert/letsencrypt/live/example.com
```
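Docker Compose fills the `${VAR}` placeholders in docker-compose.yml from this .env file. The Python sketch below is a rough approximation of that step, built on `string.Template`, and shows what the container names and `WEBDRIVER_URL` resolve to. It is not Compose's actual implementation: `string.Template` only understands plain `${VAR}`, while Compose also supports forms such as `${VAR:-default}` and, for unset variables, warns and substitutes an empty string. The helper names are hypothetical.

```python
from __future__ import annotations

from string import Template

# Excerpt of the .env file above (only the variables used below).
ENV_TEXT = """\
#general settings
COMPOSE_PROJECT_NAME=changedetection
PROJECT_NAME=changedetection
#SELENIUM
SE_NODE_MAX_SESSIONS=16
"""


def parse_env(text: str) -> dict[str, str]:
    """Parse plain KEY=VALUE lines, skipping blanks and # comments (no quoting support)."""
    env: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        env[key.strip()] = value.strip()
    return env


def interpolate(value: str, env: dict[str, str]) -> str:
    """Resolve ${VAR} references; unlike Compose, raises KeyError for unset variables."""
    return Template(value).substitute(env)


if __name__ == "__main__":
    env = parse_env(ENV_TEXT)
    for raw in ("${PROJECT_NAME}-app",
                "http://${PROJECT_NAME}-seleniumchrome:4444/wd/hub",
                "SE_NODE_MAX_SESSIONS=${SE_NODE_MAX_SESSIONS}"):
        print(raw, "->", interpolate(raw, env))
```

One thing this makes visible: the HAProxy config does not go through this substitution and hardcodes `changedetection-app:5000`, so it only matches the app container while `PROJECT_NAME` stays `changedetection`.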

And here’s the HAProxy config for the most curious, if you want to set up changedetection.io yourself:

```haproxy
global
    # Settings under global define process-wide security and performance tunings that affect HAProxy at a low level.
    # Max number of connections haproxy will accept
    maxconn 1024
    # Logging to stdout preferred when running as a container.
    log stdout format raw local0
    # Only TLS version 1.2 and newer is allowed:
    ssl-default-bind-options ssl-min-ver TLSv1.2

defaults
    # Defaults here
    # As your configuration grows, using a defaults section will help reduce duplication.
    # Its settings apply to all of the frontend and backend sections that come after it.
    # You’re still free to override those settings within the sections that follow.

    # this updates different proxies (frontend, backend, and listen sections) to send messages
    # to the logging mechanism/server(s) configured in the global section
    log global
    # Will enable more verbose HTTP logging
    # Enable http logging format to include more details logs
    option httplog
    # Enable HTTP connection closing on the server side but support keep-alive with clients
    # (This provides the lowest latency on the client side (slow network) and the fastest session reuse on the server side)
    option http-server-close
    # option httpclose
    # Don't use httpclose and http-server-close, httpclose will disable keepalive on the client side
    # Expect HTTP layer 7, rather than load-balance at layer 4
    mode http
    # A connection on which no data has been transferred will not be logged (such as monitor probes)
    option dontlognull
    # Various response timeouts
    timeout connect 5s
    timeout client 20s
    timeout server 45s

frontend fe-app-combined
    mode tcp
    bind *:80
    tcp-request inspect-delay 2s
    tcp-request content accept if HTTP
    tcp-request content accept if { req.ssl_hello_type 1 }
    use_backend be-app-recirc-http if HTTP
    default_backend be-app-recirc-https

backend be-app-recirc-http
    mode tcp
    server loopback-for-http abns@app-haproxy-http send-proxy-v2

backend be-app-recirc-https
    mode tcp
    server loopback-for-https abns@app-haproxy-https send-proxy-v2

frontend fe-app-https
    mode http
    bind abns@app-haproxy-https accept-proxy ssl crt /certs/fullkeychain.pem alpn h2,http/1.1
    # whatever you need todo for HTTPS traffic
    default_backend be-app-real

frontend fe-app-http
    mode http
    bind abns@app-haproxy-http accept-proxy
    # whatever you need todo for HTTP traffic
    redirect scheme https code 301 if !{ ssl_fc }

backend be-app-real
    mode http
    balance roundrobin
    # Enable insertion of the X-Forwarded-For header to requests sent to servers
    option forwardfor
    #https://serverfault.com/questions/743842/add-haproxy-x-forwarded-host-request-header
    http-request set-header X-Forwarded-Host %[req.hdr(Host)]
    http-request set-header X-Forwarded-Port %[dst_port]
    http-request add-header X-Forwarded-Proto https if { ssl_fc }
    # Send these request to check health
    option httpchk
    http-check send meth GET uri / ver HTTP/1.1 hdr Host haproxy.local
    http-check expect status 200-399
    server app-backend1 changedetection-app:5000 check
```
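The `fe-app-combined` frontend serves both plain HTTP and HTTPS on a single port. It peeks at the first bytes of each connection and then loops it back over an abstract socket (with PROXY protocol v2) to either `fe-app-http`, which redirects to HTTPS, or `fe-app-https`, which terminates TLS. The Python sketch below is a simplified illustration of that routing decision. The TLS check mirrors what `{ req.ssl_hello_type 1 }` looks for (a handshake record whose message type is ClientHello), but the method-prefix check is only a stand-in for HAProxy's predefined `HTTP` ACL, which actually parses the request. The function names are hypothetical.

```python
HTTP_METHODS = (b"GET ", b"HEAD ", b"POST ", b"PUT ", b"DELETE ",
                b"OPTIONS ", b"PATCH ", b"CONNECT ", b"TRACE ")


def classify(first_bytes: bytes) -> str:
    """Guess the protocol from the first bytes a client sends."""
    # TLS record header: type 0x16 (handshake), 2-byte version, 2-byte length,
    # then the handshake message type, where 0x01 means ClientHello.
    if len(first_bytes) >= 6 and first_bytes[0] == 0x16 and first_bytes[5] == 0x01:
        return "tls"  # what { req.ssl_hello_type 1 } matches
    if first_bytes.startswith(HTTP_METHODS):
        return "http"  # rough stand-in for HAProxy's predefined HTTP ACL
    return "unknown"


def pick_backend(first_bytes: bytes) -> str:
    """Mirror the use_backend / default_backend lines of fe-app-combined."""
    if classify(first_bytes) == "http":
        return "be-app-recirc-http"
    return "be-app-recirc-https"  # default_backend


if __name__ == "__main__":
    client_hello_start = b"\x16\x03\x01\x00\xc8\x01"  # start of a TLS ClientHello
    plain_request = b"GET / HTTP/1.1\r\nHost: example.com\r\n\r\n"
    for sample in (client_hello_start, plain_request):
        print(classify(sample), "->", pick_backend(sample))
```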

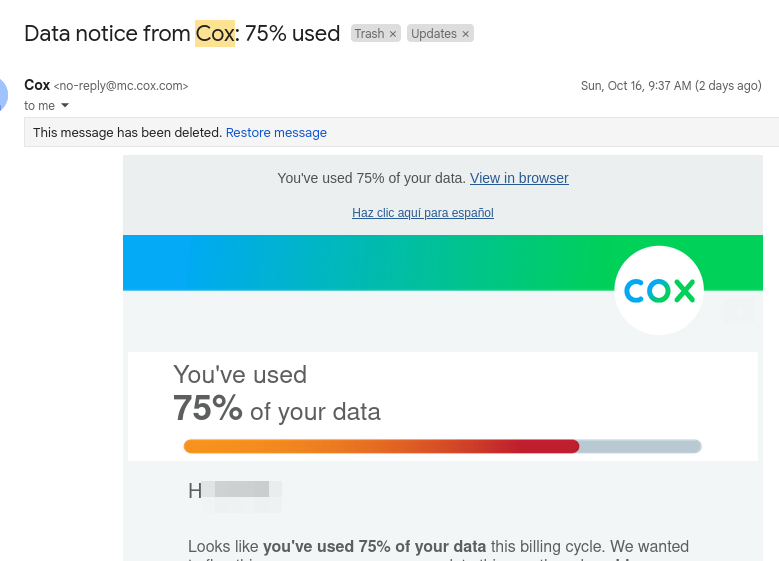

Cox email overage alert

And then I just left it running, because “why not”, I thought.

I was roused from my slumber by this Cox email alert just a couple of days later.

I initially suspected my YouTube addiction, or perhaps some other heavy usage, but one glance at my router's traffic analyzer pointed to my AMD Ryzen homelab server as the traffic hog, and one look at iptraf removed all doubt, pointing decisively to changedetection.io.

I turned off all my changedetection.io tests immediately, while screaming in horror and belated disbelief.

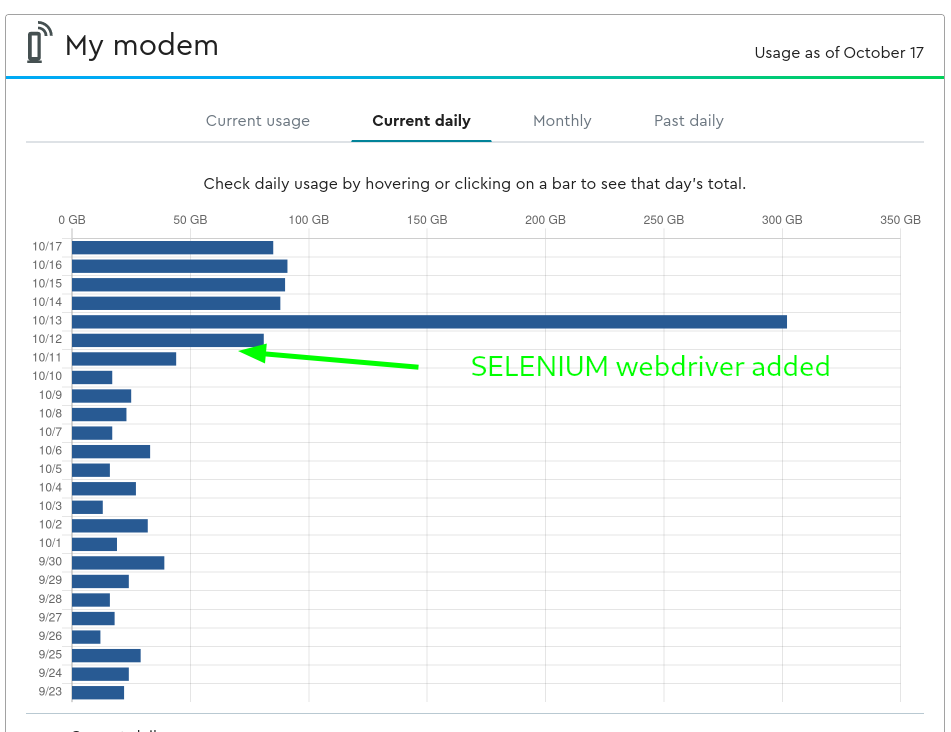

Results: Cox daily internet usage chart

I enabled the Selenium test described above sometime on October 11th or 12th, and the traffic graph shows a very evident jump around then.

The graph shows the result (I'm not sure about the spike on 10/13; I may have been messing around with my tests more heavily than usual then).

The difference is fairly dramatic: it doubles or triples my usual (already heavy) daily traffic. I could easily cross my internet cap (1 TB a month) in a little more than 10 days, and this is with just 12 URLs being tested with Chrome WebDriver. I don't know about you, but I certainly underestimated the traffic it takes to fully load a heavy website with heavy JavaScript and ads that then constantly download more stuff.

I have no problem upgrading to an unlimited data package or paying the overage charge, but not everyone is that fortunate, and the amount of traffic one can incur from such a seemingly innocent test is staggering. Cox overage charges as of October 18th, 2022 are $10 per 50 GB, so depending on when you catch it, your bill could bite you.
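To see how quickly that adds up, here is a small Python sketch that turns a steady daily usage into days-until-cap and an overage estimate, using the 1 TB cap and $10 per 50 GB figures above. Several assumptions are baked in and worth checking against your own plan: decimal units (1 TB = 1000 GB), partial 50 GB blocks billed as full blocks, a 30-day month, and no plan-specific ceilings or grace periods. The 90 GB/day value is hypothetical, chosen to be roughly consistent with hitting 1 TB in a little over 10 days.

```python
import math

CAP_GB = 1000         # 1 TB monthly cap, assuming decimal units (1 TB = 1000 GB)
BLOCK_GB = 50         # overage sold in 50 GB blocks
BLOCK_PRICE_USD = 10  # $10 per block (Cox pricing as of October 2022)


def days_to_cap(gb_per_day: float, cap_gb: float = CAP_GB) -> float:
    """Days until the monthly cap is reached at a steady daily usage."""
    return cap_gb / gb_per_day


def overage_cost(total_gb: float) -> int:
    """Overage charge in USD; assumes partial blocks are billed as full blocks."""
    over_gb = max(0.0, total_gb - CAP_GB)
    return math.ceil(over_gb / BLOCK_GB) * BLOCK_PRICE_USD


if __name__ == "__main__":
    daily = 90  # hypothetical GB/day, roughly in line with hitting 1 TB in ~11 days
    print(f"cap reached after ~{days_to_cap(daily):.1f} days")
    print(f"30-day month at {daily} GB/day: ${overage_cost(daily * 30)} overage")
```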

Lessons learned:

- Selenium WebDriver can generate a massive amount of traffic, especially if the website you are testing loads a lot of ads and images. Make sure you use a real hosted remote server or a VPS, as they typically come with an unlimited 1 Gbps link or at least a hefty traffic package (8-10 TB). Otherwise, make sure your residential internet line is uncapped.

- This also seems like a no-brainer to me: support legislation banning data caps.

Just figured I'd share my experience. Thanks for reading!