You are caching your site in an origin cache such as Varnish Cache. You may want a relatively short cache TTL (let’s use 24 hours for this example) for reasons such as:

- Content Freshness: if you update the site often, you don’t want your visitors seeing an old cached version of a page. (News sites like cnn.com or nytimes.com, whose content changes often, use much shorter cache TTLs, around 5 minutes.)

- Dynamic Content – maybe your pages have dynamic content that changes frequently

This means that cached pages stay in Varnish Cache for only 24 hours. What happens when another visitor requests a page after those 24 hours? The request goes to the origin server, and the response is re-cached for another 24 hours. Rinse and repeat.

The problem with this flow: if you have many posts, pages and other long-tail content, a lot of visitors will hit a cold cache and get much slower responses, because their requests have to go to a slow origin server (let’s just say it: WordPress is slow).

You don’t need to use your site visitors as cache warmers. You just need to “warm the cache” before visitors hit your site, and keep re-warming it via a cron job.

Below is a simple cache warming strategy that keeps a web application such as WordPress always warm in cache. This is how I do cache warming with wget2.

Why use cache such as Varnish cache?

- Speed and Responsiveness:

  - When users visit your website, their browsers make requests to your server for specific content. Without caching, your server must generate the content dynamically each time, which can be resource-intensive.

  - Varnish steps in by intercepting these requests. Upon the first request for a particular piece of content, Varnish forwards it to your web server, which responds with the appropriate data. Varnish then caches this response in memory.

  - Subsequent requests for the same content are served directly from the Varnish cache, bypassing the need to hit your web server again. This results in faster page delivery and improved responsiveness for your users.

- Reduced Server Load:

  - As your application scales and attracts more users, the sheer volume of requests to your web server can become overwhelming.

  - By caching content with Varnish, your server doesn’t need to handle every single request. Instead, Varnish serves cached responses, effectively reducing the load on your server.

  - This optimization allows your application to handle a large number of concurrent requests without straining your server resources.

- Massive Performance Boost:

  - Varnish’s threading model and memory storage approach make it incredibly fast. It relies on pthreads to manage a vast number of incoming requests.

  - When configured correctly, Varnish Cache can make your website up to 1,000 times faster.

  - By leveraging Varnish, you can achieve impressive performance gains without the need for vertical or horizontal scaling.

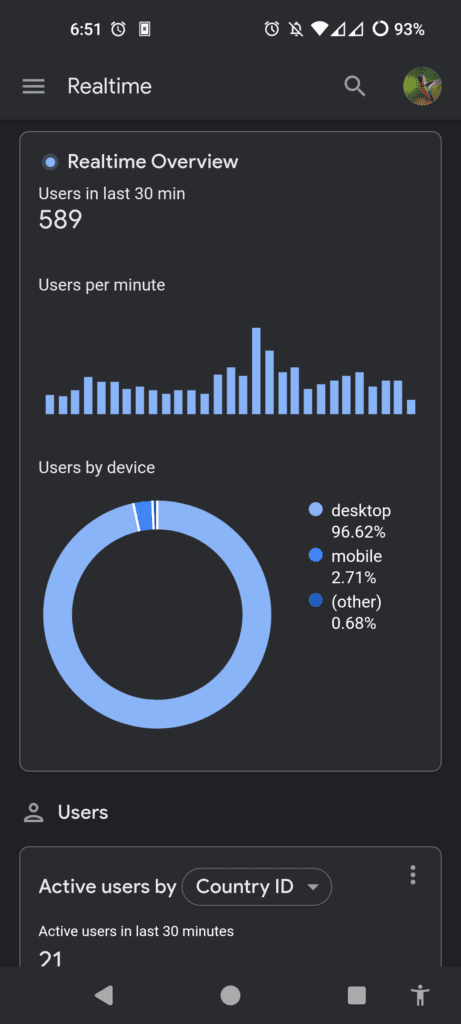

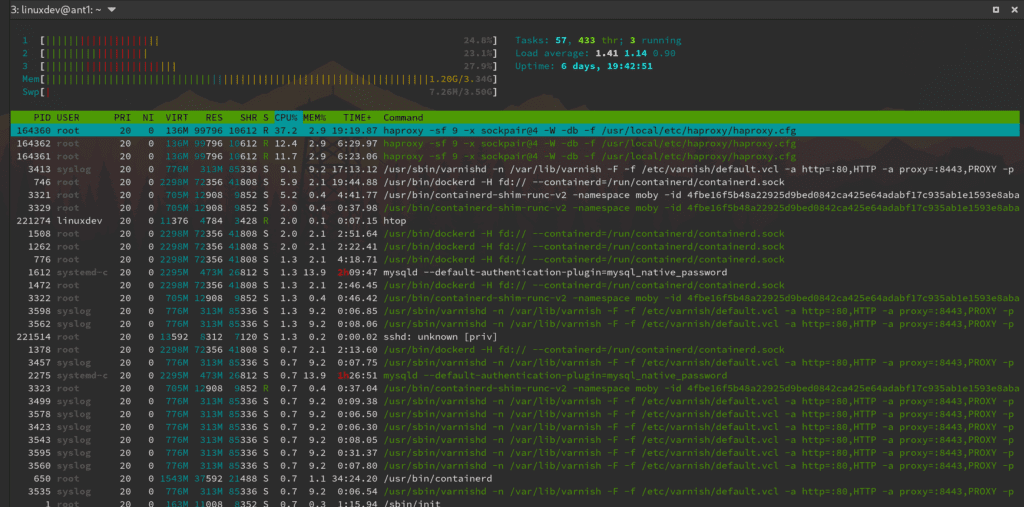

To illustrate: this WordPress blog is hosted on a cheap RackNerd Black Friday VPS that costs less than $30 a year. Yet my WordPress install easily handles 600 real-time visitors (as reported by Google Analytics) with Varnish Cache, barely reaching 30% CPU (and most of that is TLS work in HAProxy). With WordPress, this is only possible with a cache.

Why warm the cache?

As explained previously, we don’t want our users to act as our cache warmers.

Every visitor should get a super fast response 🚀 regardless of the time of day they visit the site. Speed is good, speed is love.

- Faster Response Times:

  - Pre-warming the cache involves loading frequently accessed content into memory before actual user requests arrive.

  - When a user subsequently requests a page, Varnish serves the cached version instantly, eliminating the need to generate it from scratch.

  - Result: Lightning-fast response times for your website visitors.

- Optimized Resource Usage:

  - By pre-warming the cache during off-peak hours, you ensure that critical content is readily available.

  - This reduces the load on your backend servers when traffic spikes occur.

  - Benefit: Efficient resource utilization and improved overall system performance.

But if I use Cloudflare or Cloudfront – do I still need to worry about cache warming?

Yes, it’s actually worse. Cloudflare and CloudFront have hundreds of POP locations worldwide. When a user requests a web page, Cloudflare or CloudFront serves it from the nearest POP. However, with this distributed approach each POP keeps its own cache, and that cache is empty for any content that hasn’t been requested there recently. And by “recently”, check your cache-control header duration: if your cache-control is 4 hours, for example, then the Cloudflare/CloudFront edge servers will expire your content that often, meaning some number of users will be hitting your (potentially) very slow website directly.

Personally, I don’t care how small the number of people hitting a cold cache is. I just don’t want it to happen at all, which is why I keep the cache warm at all times using Varnish Cache.

This is how I warm the cache

Typically I have this code on some server:

Install wget2:

# on debian based

sudo apt-get install wget2

#on rhel based:

sudo dnf install wget2

Add this cronjob. I run it every 12 hours because my website’s cache-control header is set to 24h, which means all my content stays preloaded in Varnish Cache at all times. Your cron schedule might be different; check your cache-control headers:

#prewarm bytepursuits:

0 */12 * * * /mnt/480g_drive/projects/cache-warmer/bytepursuits.com/cachewarm-html.sh
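The 12-hour schedule is simply half of the 24-hour TTL. As a rough sketch of that arithmetic, the hypothetical helper below (`warm_interval_seconds` is my own name, not part of wget2 or Varnish) takes a Cache-Control header value and prints half of its TTL in seconds. It assumes `s-maxage` should win over `max-age` when both are present, which is how shared caches such as Varnish treat them.

```shell
# Hypothetical helper: print a re-warm interval (half the TTL, in seconds)
# for a given Cache-Control header VALUE (without the "Cache-Control:" name).
warm_interval_seconds() {
    # Lowercase, split the directives onto separate lines, strip spaces.
    directives=$(printf '%s\n' "$1" | tr 'A-Z' 'a-z' | tr ',' '\n' | tr -d ' ')

    # Prefer s-maxage, which applies to shared caches.
    ttl=$(printf '%s\n' "$directives" \
        | sed -n 's/^s-maxage=\([0-9][0-9]*\)$/\1/p' | head -n 1)

    # Fall back to max-age when s-maxage is absent.
    if [ -z "$ttl" ]; then
        ttl=$(printf '%s\n' "$directives" \
            | sed -n 's/^max-age=\([0-9][0-9]*\)$/\1/p' | head -n 1)
    fi

    # No TTL directive found: signal failure instead of guessing.
    [ -n "$ttl" ] || return 1

    echo $((ttl / 2))
}
```

For a 24h header, `warm_interval_seconds "public, max-age=86400"` prints 43200, which is the 12 hours used in the cron entry above.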

Actual prewarmer script:

#!/usr/bin/env bash

#Prod version - works just fine:

wget2 --recursive \
  --no-parent \
  --level=0 \
  --tries=3 \
  --timeout=11 \
  --spider \
  --delete-after \
  --header "Accept-Encoding: br" \
  --header "x-local-prewarm: Evasion4-Wow-Visibly" \
  --user-agent=bp-warming-client \
  --secure-protocol=TLSv1_3 \
  --limit-rate=0.1M \
  --reject '*.js,*.css,*.txt,*.gif,*.jpg,*.jpeg,*.png,*.mp4,*.mp3,*.woff2,*.pdf,*.tgz,*.flv,*.avi,*.mpeg,*.iso' \
  --ignore-tags=img,link,script \
  --domains=www.bytepursuits.com,bytepursuits.com \
  https://bytepursuits.com

# OPTIONAL: Notify the healthchecks api - I use healthchecks to know if my crawler script didn't run as expected.

curl -fsS -m 10 --retry 5 -o /dev/null https://healthchecks.io/ping/0dea0ed1-0175-icb4-a262-9521f77c67f2

What is going on here

wget2 is a modern version of wget. Basically, wget2 is wget on steroids, with cleaner code, multi-threaded parallelization and a bunch of other features I’m not going to list. wget2 will crawl your site and execute a GET request for every URL it finds; we will use this capability to crawl the entire website and warm the cache.

- --no-parent: Do not ever ascend to the parent directory when retrieving recursively. This is a useful option, since it guarantees that only the files below a certain hierarchy will be downloaded.

- --level=0: Sets the maximum recursion depth. 0 removes the depth limit, so the crawl follows links as deep as the site goes.

- --tries=3: Retry a couple of times if there is an error (this won’t retry on 404 or connection refused, and it shouldn’t, as those are more like permanent errors).

- --timeout=11: Wait for 11 seconds before giving up.

- --spider: Web spider mode, which means that it will not download the pages, just check that they are there using GET.

- --delete-after: Delete every single file it downloads, after having done so.

- --header "Accept-Encoding: br": This is tricky. Proxy caches such as Varnish Cache cache pages with different Accept-Encoding values separately. So you may want to run the warmer script once with --header "Accept-Encoding: gzip" and once with --header "Accept-Encoding: br". See more notes below.

- --header "x-local-prewarm: Evasion4-Wow-Visibly": This is super important and is explained separately below.

- --user-agent=bp-warming-client: Just a unique user agent so I can tell normal human requests from warmer crawls in my logs.

- --secure-protocol=TLSv1_3: You probably don’t need it, since it will default to something sensible, but my site supports TLS 1.3 so I set it to TLS 1.3.

- --limit-rate=0.1M: wget2 is multi-threaded and can really load your site (DDoS-like). Limit the rate so you don’t DDoS yourself.

- --reject '*.js,*.css,*.txt,*.gif,*.jpg,*.jpeg,*.png,*.mp4,*.mp3,*.woff2,*.pdf,*.tgz,*.flv,*.avi,*.mpeg,*.iso': imo you likely shouldn’t be caching media files in Varnish Cache, as they could just be too big to be stored in cache. You also want to configure these assets to have cache-control: s-maxage=0, so Varnish Cache doesn’t store them and they are instead served directly by your web server, such as nginx.

- --ignore-tags=img,link,script: Don’t follow the URLs in these tags.

- --domains=www.bytepursuits.com,bytepursuits.com: Only crawl your own domain and ignore any links to other sites.
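Since the crawler sends its own user agent, a quick way to sanity-check a run is to count its requests in the origin access log. The sketch below is only an illustration: `count_warmer_requests` is a hypothetical helper, and it assumes your access log writes the User-Agent on every line (as the common “combined” log format does). The log path in the usage note is an assumption too.

```shell
# Hypothetical helper: count access-log lines produced by the warmer.
# $1 = path to an access log that records the User-Agent on each line
# $2 = user agent string to look for (defaults to the one used by the crawl)
count_warmer_requests() {
    log_file=$1
    agent=${2:-bp-warming-client}
    # -F: match the agent as a fixed string, -c: print the number of matching lines
    grep -c -F -- "$agent" "$log_file"
}
```

For example, `count_warmer_requests /var/log/nginx/access.log` (path assumed) should print roughly the number of pages on the site after a full crawl. A number far below that suggests the crawl stopped early or the reject and ignore rules are filtering out more than intended.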

What makes this crawling warm the cache?

Crawling the site with wget2 does not, by itself, refresh a page that is already cached: Varnish would simply answer the crawler from cache. To refresh the cache, you need to configure your caching software (in my case, Varnish Cache) to pass these crawls to the origin server.

This is how to do this in Varnish Cache (you would need to add this in vcl_recv):

if (req.http.x-local-prewarm && req.method == "GET" && req.http.x-local-prewarm == "Evasion4-Wow-Visibly") {
    set req.hash_always_miss = true;
    unset req.http.x-local-prewarm;
}

^ Here we force a cache miss only for GET requests that carry the x-local-prewarm header with a password-like value.

--header "x-local-prewarm: Evasion4-Wow-Visibly" acts as an indication to the Varnish server that this request has to be passed to the origin. Additionally, it’s kind of like a password, so internet randos cannot DDoS us if they accidentally find the x-local-prewarm header.

This Varnish config: set req.hash_always_miss = true; instructs Varnish Cache to skip the cache lookup for this request, fetch the page from the origin server, re-cache the response and reset the TTL.
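One way to check that the rule behaves as described is to look at the X-Varnish response header. Varnish puts a single transaction ID there when the response was fetched from the backend, and two IDs when it was served from cache. The sketch below is an illustration under assumptions: `varnish_cache_status` is a hypothetical helper, and it only works if nothing in front of Varnish (HAProxy, a CDN) strips or rewrites X-Varnish.

```shell
# Hypothetical helper: read HTTP response headers on stdin and print
# "hit", "miss" or "unknown" based on the X-Varnish header.
varnish_cache_status() {
    # Strip CRs, keep only the X-Varnish value, trim surrounding whitespace.
    ids=$(tr -d '\r' \
        | sed -n '/^[Xx]-[Vv]arnish:/{s/^[^:]*:[[:space:]]*//;s/[[:space:]]*$//;p;}' \
        | head -n 1)

    case "$ids" in
        "")    echo "unknown" ;;  # header missing or removed upstream
        *" "*) echo "hit" ;;      # two IDs: served from cache
        *)     echo "miss" ;;     # one ID: fetched from the backend
    esac
}
```

A GET sent with the prewarm header, for example `curl -s -o /dev/null -D - -H "x-local-prewarm: Evasion4-Wow-Visibly" https://bytepursuits.com/ | varnish_cache_status`, should report miss every time. The same command without the header, run right afterwards, should report hit. Note that `curl -I` sends HEAD rather than GET, so it would not match the VCL condition.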

Accept-Encoding header and caching. Normalizing Accept-Encoding

Requests with different Accept-Encoding header values are cached separately.

- Accept-Encoding: gzip, deflate, br

- Accept-Encoding: deflate, gzip, br

- Accept-Encoding: br, gzip

- Accept-Encoding: gzip, br

- Accept-Encoding: br

- etc

^ Which means that if browsers send all of these values, we will have that many versions of each cached page in Varnish Cache. Not only is this an inefficient use of resources, it also causes problems with warming the cache: now we have to warm every single variation of Accept-Encoding. This is clearly not good.

What you can do is normalize the Accept-Encoding value in Varnish:

# 8. Accept-Encoding normalization

if (req.http.Accept-Encoding) {
    if (req.url ~ "\.(jpg|jpeg|webp|png|gif|gz|tgz|bz2|tbz|mp3|ogg|swf|avi|flac|mkv|mp4|mp3|pdf|wav|woff|woff2|zip|xz|br)$") {
        # These are already compressed, brotli/gzip won't help here
        unset req.http.Accept-Encoding;
    } elseif (req.http.Accept-Encoding ~ "br") {
        set req.http.Accept-Encoding = "br";
    } elseif (req.http.Accept-Encoding ~ "gzip") {
        set req.http.Accept-Encoding = "gzip";
    } else {
        # unknown algorithm (aka bad browser maybe? ddos attack? who knows)
        unset req.http.Accept-Encoding;
    }
}

^ Remove the Accept-Encoding header for precompressed files. Otherwise, if Accept-Encoding contains br, set it to just br; else if it contains gzip, set it to just gzip; anything else is removed.

This way we will only have 3 possible variations to worry about:

- Accept-Encoding: br

- Accept-Encoding: gzip

- no Accept-Encoding
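To see which of the three variants a given browser header ends up in, the VCL rules can be mirrored in a few lines of shell. This is only an illustration of the logic: `normalized_encoding` is a hypothetical helper, Varnish does the real work, and the extension list here is deliberately shortened compared to the VCL regex.

```shell
# Hypothetical helper: mirror the VCL normalization rules.
# $1 = request URL path, $2 = Accept-Encoding value sent by the client
# Prints "br", "gzip" or "none" (header absent or removed).
normalized_encoding() {
    url=$1
    accept_encoding=$2

    # No header at all: nothing to normalize.
    [ -n "$accept_encoding" ] || { echo "none"; return; }

    # Already-compressed files: the header is dropped (shortened list).
    case "$url" in
        *.jpg|*.jpeg|*.png|*.gif|*.mp4|*.woff2|*.pdf|*.zip)
            echo "none"; return ;;
    esac

    # Same order as the VCL: brotli wins over gzip, anything else is dropped.
    case "$accept_encoding" in
        *br*)   echo "br" ;;
        *gzip*) echo "gzip" ;;
        *)      echo "none" ;;
    esac
}
```

With this, `normalized_encoding /some-post/ "gzip, deflate, br"` and `normalized_encoding /some-post/ "br, gzip"` both print br, which is why a single brotli crawl covers all of those browser variations.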

However, brotli is supported by all major browsers, with about 97% global support according to caniuse. That means I can reasonably expect people to always have brotli in their request’s Accept-Encoding value, so it’s completely fine to warm the cache just for brotli, which is why I just use this for wget2 crawling: --header "Accept-Encoding: br". However, if you also want to pre-warm for gzip, run a separate crawl with: --header "Accept-Encoding: gzip"

Why no deflate? deflate is dead in my view. A November 2021 Web Almanac study found that a staggering 0.0% of servers returned a deflate-compressed response, down from 0.02% in the Web Almanac 2020 study. I would not bother supporting it on the server side.
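If you do want both variants warm, one option is to wrap the crawl in a function and call it once per encoding. The sketch below is an assumption-laden illustration, not my production script: `warm_site`, `warm_all_encodings` and the `WGET2_BIN` variable are hypothetical names, and only a subset of the flags from the script above is repeated to keep it short (add the reject, ignore-tags and domains options back for real use).

```shell
# Hypothetical wrapper: run one warming crawl for a single Accept-Encoding.
# WGET2_BIN is an assumed override so the command can be swapped for a dry run.
warm_site() {
    encoding=$1
    "${WGET2_BIN:-wget2}" --recursive --no-parent --level=0 --spider \
        --tries=3 --timeout=11 \
        --header "Accept-Encoding: $encoding" \
        --header "x-local-prewarm: Evasion4-Wow-Visibly" \
        --user-agent=bp-warming-client \
        --limit-rate=0.1M \
        https://bytepursuits.com
}

# Warm the brotli variant first, then gzip. A failed crawl is reported
# on stderr but does not stop the next one.
warm_all_encodings() {
    for encoding in br gzip; do
        warm_site "$encoding" \
            || echo "warm-up for $encoding exited with status $?" >&2
    done
}
```

Running `WGET2_BIN=echo warm_all_encodings` prints the two command lines instead of crawling, which is a cheap way to check the arguments before putting the function into the cron script.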

Specifics of my setup – I compress brotli and gzip in Nginx

I prefer to do the brotli and gzip compression in Nginx instead of Varnish, and because of that I explicitly disable gzip compression in Varnish, which is otherwise on by default. Brotli support is not enabled by default in Varnish; I believe that if you want it you need a brotli vmod, which is an enterprise-only vmod. Why do I do this? I want brotli at all times and I’m more familiar with the Nginx way of doing brotli compression, so Varnish is then used only for caching. Here’s a long article I wrote on how to configure brotli with gzip fallback in nginx.

To disable the automatic gzip I run my varnish cache container like this:

command: -p feature=+http2 -p http_gzip_support=off

Other gotchas

Check your site’s response Vary header; there could be other values your responses “vary on”. For caching, this means you would need to warm the cache for every combination of values of the headers listed in Vary.

Thank you for reading this cache warming article. A special thank you to Guillaume from the Varnish team, who gave me the idea of using wget for cache warming (btw, their Discord server is super helpful). This approach has been working rock solid for me for a couple of years now.
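A quick way to see what your responses vary on is to pull the Vary header out of a response and list its fields. The sketch below is an illustration: `vary_fields` is a hypothetical helper that reads response headers on stdin and prints each field named in Vary once, lowercased and sorted.

```shell
# Hypothetical helper: list the header names found in the Vary header(s)
# of an HTTP response whose headers are supplied on stdin.
vary_fields() {
    tr -d '\r' \
        | sed -n '/^[Vv]ary:/{s/^[^:]*:[[:space:]]*//;p;}' \
        | tr ',' '\n' \
        | sed 's/^[[:space:]]*//;s/[[:space:]]*$//' \
        | sed '/^$/d' \
        | tr 'A-Z' 'a-z' \
        | sort -u
}
```

For example, `curl -s -o /dev/null -D - https://bytepursuits.com/ | vary_fields` prints one header name per line. If the only line is accept-encoding, the brotli and gzip crawls are all you need; anything else in the list is another dimension the warmer has to cover.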